Programming Exercise 5: Feed-Forward Classifier with Single/Multiple Hidden Layers for the MNIST Dataset

A Python (scikit-learn-based) implementation that explores how different hyperparameters affect a feed-forward neural network with one or more fully connected hidden layers.

A brief analysis of the results is provided in Portuguese. The project was submitted as an assignment for the graduate course Connectionist Artificial Intelligence at UFSC, Brazil.

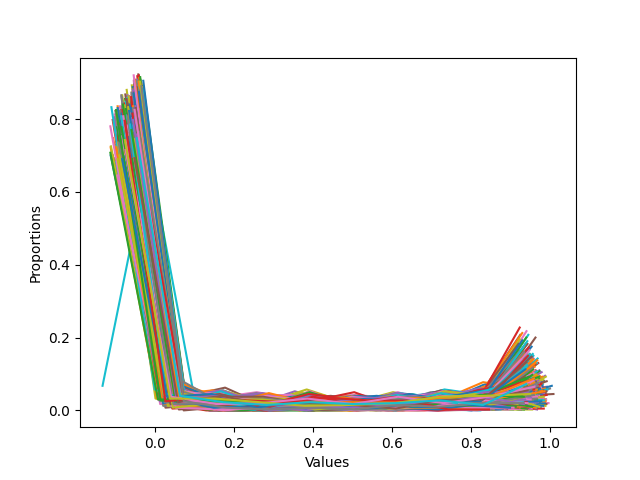

In short, several normalization methods are evaluated on a single-hidden-layer FFNET that classifies handwritten digits from the MNIST dataset, using different training algorithms, learning rates (alpha), numbers of epochs, and activation functions. The best-performing configurations are then applied to several multi-layer networks of fully connected perceptrons for comparison.
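The two-stage protocol described above can be sketched with scikit-learn's `MLPClassifier`. This is a minimal illustration under stated assumptions, not the code used for the assignment: it uses the small 8x8 `load_digits` set as an offline stand-in for MNIST, MinMax scaling, and an arbitrary hyperparameter grid. Note that in `MLPClassifier` the learning rate is `learning_rate_init`, while the parameter named `alpha` is the L2 regularization strength; for the `sgd` and `adam` solvers, `max_iter` counts epochs.

```python
import warnings

from sklearn.datasets import load_digits
from sklearn.exceptions import ConvergenceWarning
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.preprocessing import MinMaxScaler

# Assumption: scikit-learn's small 8x8 digits set stands in for MNIST so the
# sketch runs offline in seconds.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)
scaler = MinMaxScaler().fit(X_train)  # fit on the training split only
X_train, X_test = scaler.transform(X_train), scaler.transform(X_test)


def evaluate(**params):
    """Train one MLPClassifier and return its macro F1-score on the test split."""
    clf = MLPClassifier(random_state=0, **params)
    with warnings.catch_warnings():
        # Small epoch budgets stop before convergence; silence that warning.
        warnings.simplefilter("ignore", ConvergenceWarning)
        clf.fit(X_train, y_train)
    return f1_score(y_test, clf.predict(X_test), average="macro", zero_division=0)


# Stage 1: one hidden layer, sweep solver, learning rate, epochs, activation.
# The values below are illustrative, not the grid used in the assignment.
grid = [
    {"solver": solver, "learning_rate_init": lr, "max_iter": epochs, "activation": act}
    for solver in ("sgd", "adam")
    for lr in (0.1, 0.01)
    for epochs in (20, 50)
    for act in ("relu", "logistic")
]
results = [(evaluate(hidden_layer_sizes=(64,), **p), p) for p in grid]
best_f1, best_params = max(results, key=lambda r: r[0])
print(f"best single-layer F1 = {best_f1:.3f} with {best_params}")

# Stage 2: reuse the best hyperparameters on deeper fully connected networks.
architectures = [(64,), (64, 32), (128, 64, 32)]
multi = {arch: evaluate(hidden_layer_sizes=arch, **best_params) for arch in architectures}
for arch, score in multi.items():
    print(f"hidden layers {arch}: macro F1 = {score:.3f}")
```

Stage 2 keeps the best single-layer hyperparameters fixed and varies only `hidden_layer_sizes`, so any difference in F1 is attributable to depth and width; the architectures listed are illustrative.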

| Before normalization | MinMax normalization | MaxAbs normalization |
|---|---|---|
| **L2 normalization** | **(x - μ) / σ normalization** | **Quantile-Uniform normalization** |
| **Quantile-Normal normalization** | | |
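The seven panels correspond to standard preprocessing transforms. The sketch below shows one plausible mapping onto `sklearn.preprocessing` classes; the mapping itself (in particular, reading "L2" as the per-sample `Normalizer` and the two quantile variants as `QuantileTransformer`) is an assumption, and the random 784-column matrix only imitates flattened 28x28 MNIST pixels.

```python
import numpy as np
from sklearn.preprocessing import (
    MaxAbsScaler,
    MinMaxScaler,
    Normalizer,
    QuantileTransformer,
    StandardScaler,
)

# Assumed mapping from the panel titles to scikit-learn transformers.
NORMALIZERS = {
    "MinMax": MinMaxScaler(),            # rescale each feature (column) to [0, 1]
    "MaxAbs": MaxAbsScaler(),            # divide each feature by its max |x|
    "L2": Normalizer(norm="l2"),         # scale each sample (row) to unit L2 norm
    "Standard": StandardScaler(),        # (x - mu) / sigma per feature
    "Quantile-Uniform": QuantileTransformer(
        n_quantiles=100, output_distribution="uniform", random_state=0
    ),
    "Quantile-Normal": QuantileTransformer(
        n_quantiles=100, output_distribution="normal", random_state=0
    ),
}

# Fake data: 200 "images" of 784 pixel intensities in [0, 255].
rng = np.random.default_rng(0)
X = rng.integers(0, 256, size=(200, 784)).astype(float)

# In a real experiment, fit on the training split only, then transform the test split.
transformed = {name: t.fit_transform(X) for name, t in NORMALIZERS.items()}
for name, Xt in transformed.items():
    print(f"{name:>16}: min={Xt.min():.3f} max={Xt.max():.3f} mean={Xt.mean():.3f}")
```

All transforms except `Normalizer` operate column-wise (per pixel); `Normalizer` rescales each image as a whole, which is why its effect on the pixel distribution differs from the others.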

Confusion matrix of the multi-layer experiment with the highest F1-score (0.93):

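![Confusion matrix](https://raw.githubusercontent.com/fredericoschardong/MNIST-hyper-parameterization/master/Confusion%20matrix.png)

| True \ Predicted | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| **0** | 97 | 1 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 |
| **1** | 0 | 94 | 1 | 1 | 0 | 0 | 0 | 2 | 0 | 2 |
| **2** | 0 | 3 | 88 | 0 | 5 | 1 | 1 | 1 | 1 | 0 |
| **3** | 1 | 0 | 2 | 88 | 1 | 2 | 0 | 0 | 6 | 0 |
| **4** | 0 | 1 | 1 | 1 | 93 | 1 | 0 | 2 | 0 | 1 |
| **5** | 0 | 1 | 0 | 2 | 0 | 95 | 0 | 1 | 0 | 1 |
| **6** | 1 | 0 | 1 | 1 | 1 | 0 | 87 | 1 | 8 | 0 |
| **7** | 2 | 1 | 2 | 1 | 0 | 1 | 0 | 92 | 1 | 0 |
| **8** | 0 | 0 | 0 | 3 | 0 | 1 | 0 | 2 | 94 | 0 |
| **9** | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 97 |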
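Per-class precision, recall, and F1 can be recovered directly from the matrix. The sketch below assumes scikit-learn's orientation (rows are true digits, columns are predictions), which is consistent with every row summing to exactly 100 test samples.

```python
import numpy as np

# Confusion matrix of the best multi-layer run; rows = true digit,
# columns = predicted digit (assumed scikit-learn orientation).
cm = np.array([
    [97, 1, 0, 0, 0, 0, 0, 2, 0, 0],
    [0, 94, 1, 1, 0, 0, 0, 2, 0, 2],
    [0, 3, 88, 0, 5, 1, 1, 1, 1, 0],
    [1, 0, 2, 88, 1, 2, 0, 0, 6, 0],
    [0, 1, 1, 1, 93, 1, 0, 2, 0, 1],
    [0, 1, 0, 2, 0, 95, 0, 1, 0, 1],
    [1, 0, 1, 1, 1, 0, 87, 1, 8, 0],
    [2, 1, 2, 1, 0, 1, 0, 92, 1, 0],
    [0, 0, 0, 3, 0, 1, 0, 2, 94, 0],
    [0, 0, 1, 0, 0, 1, 0, 0, 1, 97],
])

tp = np.diag(cm).astype(float)         # correctly classified samples per digit
precision = tp / cm.sum(axis=0)        # column sums = how often each digit was predicted
recall = tp / cm.sum(axis=1)           # row sums = true samples per digit
f1 = 2 * precision * recall / (precision + recall)

accuracy = tp.sum() / cm.sum()
for digit, score in enumerate(f1):
    print(f"digit {digit}: F1 = {score:.3f}")
print(f"accuracy = {accuracy:.3f}, macro F1 = {f1.mean():.3f}")
```

With these numbers the accuracy is 0.925 and the macro-averaged F1 is about 0.925, consistent with the 0.93 reported above; because every class has the same support, the weighted average equals the macro average. The weakest classes are 8 (often predicted for true 6s and 3s) and 3.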